Split Testing - A/B Testing

Quantitative testing of two alternative designs to learn which better leads to a specific goal

Val Yonchev

What Is Split Testing - A/B Testing?

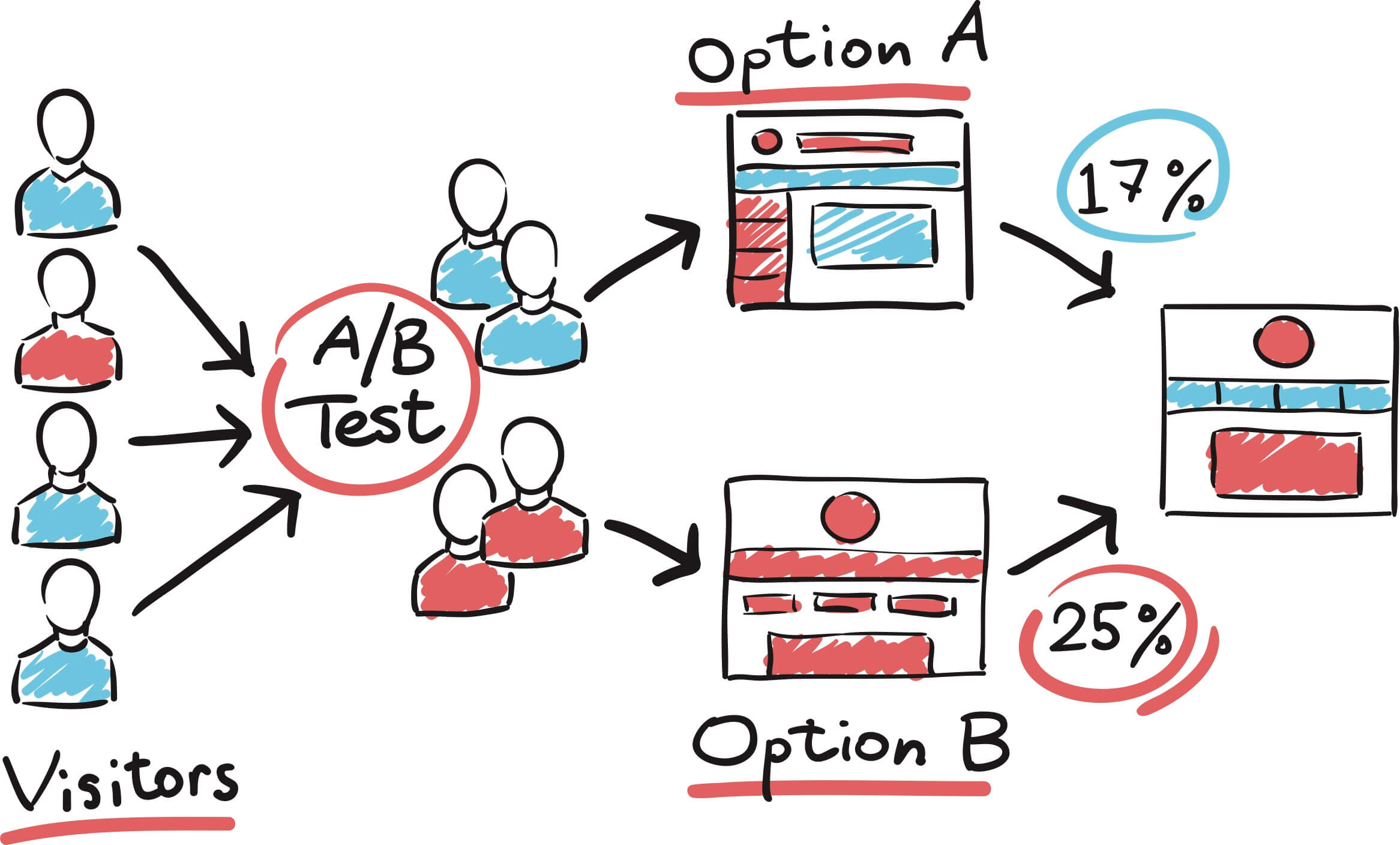

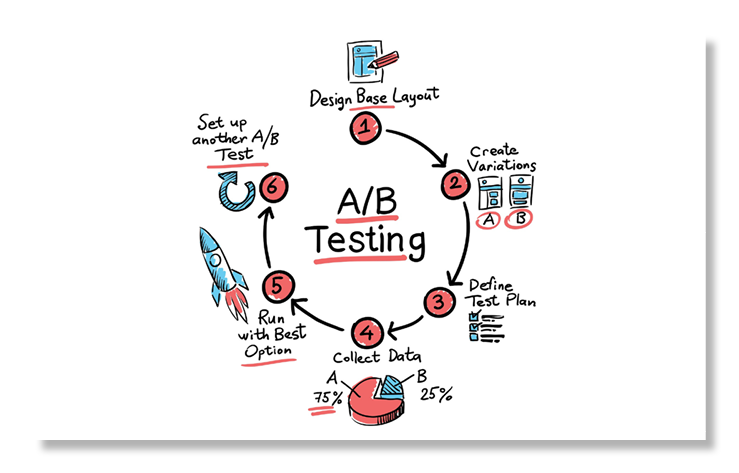

This is a randomised experiment in which we compare the performance of different versions of a product in pairs. Both versions are live in production and are served at random to different users. Data on traffic, interaction, time spent and other relevant metrics is collected and used to judge the effectiveness of the two versions based on changes in user behaviour. The test determines which version performs better against the target outcomes you set at the start.
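One common way to serve the two versions at random, while making sure a returning user keeps seeing the same version, is to hash a stable user identifier into a bucket. The following is a minimal sketch of that idea and not a prescribed implementation; the function name `assign_variant`, the experiment name `checkout-button` and the 50/50 split are all assumptions made for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "checkout-button") -> str:
    """Return "A" or "B" for a user; the answer is stable across visits.

    Both the function name and the default experiment name are hypothetical.
    """
    # Hash the experiment name together with the user id so that separate
    # experiments split the same users independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an integer: even -> A, odd -> B,
    # which gives a roughly even split across many users.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # the two counts should be close to each other
```

Because the assignment depends only on the identifier and the experiment name, no lookup table is needed to remember which user saw which version.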

Why Do Split Testing - A/B Testing?

Split testing is simple to apply and fast to execute, and conclusions can often be drawn simply by comparing the conversion or activity data between the two versions. It can also be limiting: the two versions should not differ too much, so more significant changes to the product may require a large number of A/B tests.

This is one of the practices that allow you to “tune the engine” as per The Lean Startup by Eric Ries.

The practice provides objective criteria for important decisions about product design, features, behaviour, etc. It is used to improve the product's performance, the user experience and the results the product produces for your organization.

How to Do Split Testing - A/B Testing?

You can compare two or more versions of an application or product, as long as you always compare them in pairs. The two versions in a pair need to be identical except for a single characteristic that varies between them.

The team should take care with regard to:

- Differentiating the users, e.g. new users from returning users (cohorts).

- Comparing results: the results may not always point to a significant difference between the versions, in which case the test may need to run longer or different pairs of versions should be compared.

- Always running both versions simultaneously, as multiple factors may vary with time and influence the results.
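To judge whether a difference in conversion between the two versions is significant, one widely used option is a two-proportion z-test. The sketch below assumes each user either converts or does not, and that you have simple conversion counts per version. The function name, the example numbers and the 0.05 threshold are illustrative assumptions, not values taken from this practice.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b are the numbers of converting users and n_a / n_b the
    numbers of users who saw each version. The function name is hypothetical.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the hypothesis that A and B do not differ.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Nobody (or everybody) converted in both groups: no evidence either way.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up example counts, for illustration only.
    z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
    verdict = "significant" if p < 0.05 else "not significant; consider running longer"
    print(f"z={z:.2f}, p={p:.4f}: {verdict}")
```

A large p-value does not show that the versions perform the same. It only means the data collected so far cannot tell them apart, which is when running the test longer is worth considering.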
